The Fragile AI Outsourcing Trap: Why Smart Banks Are Building, Not Buying

by Yannis Larios

Monday, 08:10, Brussels.

The CFO of a mid-size payment service provider opens last month’s invoices. Fraud is down, card volume steady. Then she sees it: the “AI customer-care assistant” line item is 4× higher than forecast. No new seats, no feature add-ons, just usage. Every customer chat and document summary triggered additional paid calls to a foundation model. The vendor sells itself as Software-as-a-Service (SaaS), but its costs behave like a metered utility. The board meeting turns from “great demo!” to “do we have a kill switch?”

This scene is repeating across European boardrooms. Hundreds of startups are selling generative-AI “solutions” that look like traditional software but hide a dangerous secret: they are “AI wrappers”, polished interfaces that forward your data to someone else’s model and return the result. When your customers love the product, usage explodes, and so does your bill (along with your vendor’s margin problems). Enterprise AI will not behave like last decade’s software rollouts: it is slower and messier, and its costs scale with queries, not seats. For boards, that last point is the whole story.

Harvard Business Review (June 24, 2025) summed it up plainly: AI isn’t SaaS — costs scale with queries, not seats.

The Economics Trap Hidden in Plain Sight

Traditional SaaS was one kind of math. AI wrappers are another entirely.

Salesforce, Microsoft, and SAP built empires on elegant unit economics: high fixed costs to build software, near-zero variable costs to serve more users. Add another 10,000 seats? The server barely notices. That’s why mature SaaS companies post 80-90% gross margins at scale.

AI wrappers flip this model inside out. Every customer request (every email drafted, document summarized, or task taken over; every fraud alert triaged, sanctions name screened, or chargeback dispute pack assembled) triggers a paid API call to OpenAI, Anthropic, or Google. Even as AI prices fall, each query still costs real money. There’s no margin magic unless the vendor owns the infrastructure.

Traditional SaaS vs AI Model Economics: Cost Per Unit by User Volume
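The chart’s point can be reproduced with a toy cost model. Every number below (fixed build costs, queries per user, price per query) is a hypothetical placeholder, not data from any vendor; what matters is the shape. SaaS cost per user keeps falling toward a small serving cost, while a wrapper’s cost per user can never drop below queries per user × price per query.

```python
def saas_cost_per_user(users: int,
                       fixed_cost: float = 2_000_000.0,
                       serve_cost_per_user: float = 5.0) -> float:
    """SaaS-style: big fixed build cost, near-zero marginal cost per user."""
    return fixed_cost / users + serve_cost_per_user


def wrapper_cost_per_user(users: int,
                          fixed_cost: float = 300_000.0,
                          queries_per_user: int = 2_000,
                          cost_per_query: float = 0.01) -> float:
    """Wrapper-style: small fixed cost, but every query is a paid upstream call."""
    return fixed_cost / users + queries_per_user * cost_per_query


if __name__ == "__main__":
    # Hypothetical user counts; all costs are in the same (unspecified) currency.
    for users in (1_000, 10_000, 100_000, 1_000_000):
        print(f"{users:>9,} users | SaaS {saas_cost_per_user(users):9.2f}"
              f" | wrapper {wrapper_cost_per_user(users):9.2f}")
```

With these placeholder inputs, cost per user at one million users is 7.00 for the SaaS model versus about 20.30 for the wrapper, which can never go below its 2,000 × 0.01 = 20 floor no matter how many users it adds.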

Jasper and Copy.ai, early investor darlings that repackaged GPT outputs into friendly interfaces, were profiled at roughly 60% gross margins. That is respectable until you realize they are essentially expensive middlemen. When usage spikes, upstream pricing changes, or platform owners bundle competing features (as OpenAI did with its DevDay announcements), that 60% can evaporate overnight.

By late 2023, Jasper cut its internal valuation amid slowing growth—textbook wrapper pressure. Meanwhile, Perplexity, which built its own retrieval architecture rather than pure wrapping, secured major distribution deals that pure wrappers rarely achieve. The pattern is simple: own the technology, gain leverage; wrap someone else’s tech, live on hope.

When AI Success Becomes Failure

Your AI wrapper vendor faces an impossible equation: success destroys profitability. A retail bank’s virtual assistant that once handled balance inquiries now navigates complex dispute flows and gathers chargeback evidence. A loan‑evaluation copilot that used to pre‑screen basic payroll data now parses full application packs (PDFs, bank statements, open‑banking feeds) and runs affordability and stress tests. Each “success” multiplies the vendor’s costs faster than its revenue, because its fees are fixed per seat or per contract while its upstream model costs grow with every query.

The dependency runs deeper than economics: your AI wrapper vendor is downstream of the model owner. If pricing, rate limits, safety filters, or latency change at the model or platform providers (OpenAI, Anthropic’s Claude, Google’s Gemini on Vertex AI, or AWS Bedrock), your vendor can’t shield you. That dependency is not theoretical; it is the operating reality of the whole layer. Boards that sign multiyear “AI SaaS” contracts without usage caps, model optionality, or exit rights are buying volatility.

Builder.ai’s spectacular collapse in May 2025 shows how quickly vendor risk becomes the client’s problem. Backed by Microsoft and major funds, the company entered insolvency amid revelations of inflated revenues and reports that its “AI” leaned on a human factory of about 700 engineers, leaving many clients without access to their code or data. Investigations and creditor filings followed: a cautionary tale for buyers dazzled by marketing promises.

The European Counter-Play: Building AI Sovereignty

Smart European institutions are choosing a different path, treating AI as critical infrastructure rather than a vendor relationship.

Deutsche Bank (Germany) — Stack ownership for risk, fraud & service. Partnered with NVIDIA (2022) to build and run models on DB‑controlled infrastructure. Outcome: auditability, latency control, on‑prem/controlled‑cloud options. Why it matters: reduces third‑party fragility.

Bunq (Netherlands) — Accelerated fraud/AML & AI assistant. GPU‑optimized training (~100× faster) and Finn now handles >50% of support chats. Outcome: fewer false positives, faster iteration, lower ops cost. Why it matters: high‑frequency core tasks justify in‑house inference to avoid metered costs.

DKB (Germany) — Direct platform partnership (no wrapper). Strategic cooperation with OpenAI to embed assistants for ~5.8M customers. Outcome: enterprise controls and roadmap influence. Why it matters: if renting, rent from the model owner; avoid thin intermediaries.

CaixaBank (Spain) — Behavioural biometrics for instant payments. Partnered with Revelock to counter Bizum‑abuse scams. Outcome: earlier interdiction; reduced APP fraud. Why it matters: compressed decision windows demand first‑party signals and policy control.

Germany (Sovereign AI cloud) — Capacity & sovereignty. Deutsche Telekom + NVIDIA targeting ~10,000 GPUs for EU industry workloads. Outcome: regional capacity under EU law. Why it matters: runway for banks to run sensitive AI in‑region. [8]

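Regulation points the same way. The forthcoming EU payment-services rules (PSD3 and the Payment Services Regulation), alongside the Digital Operational Resilience Act (DORA), applicable since January 2025, tighten outsourcing and operational-resilience expectations for PSPs and banks: critical third-party arrangements must ensure continuity, audit and access rights, clear liability, and documented exit and portability plans. Boards remain accountable for these controls, including when AI services are outsourced.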

The Three-Act Tragedy Every Board Should Recognize

Act I: The Demo

An AI vendor demonstrates near-magical capabilities: drafting compliance reports, answering customer queries, summarizing KYC documents, running anti-fraud tasks. The pilot works brilliantly. Customer satisfaction climbs. Procurement sees “SaaS” pricing and negotiates what looks like a standard annual contract.

Act II: The Adoption Cliff

Usage grows organically as product VPs, business unit directors, and client-support heads discover new applications. The vendor’s costs grow linearly with every request. They quietly push users to higher pricing tiers, throttle heavy usage, or switch to cheaper models to protect their margins. Your teams notice response quality variance and unexplained slowdowns during peak hours.

Act III: The Reckoning

Internal audit demands decision logs to meet AI governance requirements. The vendor can’t provide meaningful explainability because they don’t own the reasoning layer. Risk management notices the vendor’s most recent funding round stalled. Legal flags regulatory exposure. The board asks: “Can we switch?” and “Why didn’t we build the critical components ourselves?”

Your Strategic Response: Five Immediate Actions

1. Conduct Economics Due Diligence

Make vendors demonstrate unit economics at 2×, 5×, and 10× usage levels. What percentage of your fee goes to upstream API costs? How do their gross margins trend with volume? What happens if upstream prices increase? If they won’t show this data, you have your answer.
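One way to run this test is to take the vendor’s numbers and plug them into a model like the sketch below. It assumes, hypothetically, that the vendor charges a flat annual fee and pays its model provider per query; the inputs are illustrative only, chosen so the vendor starts at the roughly 60% gross margin cited for wrappers earlier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VendorDeal:
    annual_fee: float               # flat fee the bank pays (hypothetical)
    baseline_queries: int           # queries per year at today's usage
    upstream_cost_per_query: float  # what the vendor pays its model provider
    other_cost: float = 0.0         # hosting, support, etc. (hypothetical)


def gross_margin(deal: VendorDeal, usage_multiple: float = 1.0,
                 upstream_price_change: float = 0.0) -> float:
    """Vendor gross margin as a fraction of its revenue from this deal.

    usage_multiple: 2.0 means twice today's query volume.
    upstream_price_change: 0.25 means upstream prices rise by 25%.
    """
    queries = deal.baseline_queries * usage_multiple
    upstream = queries * deal.upstream_cost_per_query * (1 + upstream_price_change)
    cost_of_revenue = upstream + deal.other_cost
    return (deal.annual_fee - cost_of_revenue) / deal.annual_fee


if __name__ == "__main__":
    deal = VendorDeal(annual_fee=500_000, baseline_queries=10_000_000,
                      upstream_cost_per_query=0.015, other_cost=50_000)
    for multiple in (1, 2, 5, 10):
        print(f"{multiple:>2}x usage: margin {gross_margin(deal, multiple):7.1%}"
              f" | with +25% upstream prices: {gross_margin(deal, multiple, 0.25):7.1%}")
```

With these inputs the margin falls from 60% at today’s usage to 30% at 2×, then turns negative at 5× (-60%) and 10× (-210%); a 25% upstream price rise alone takes the baseline down to 52.5%. A vendor facing those numbers has strong incentives to throttle, re-tier, or switch models mid-contract.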

2. Own High-Impact, High-Frequency Use Cases

Plot your AI applications on frequency versus business impact. Anything in the high-high quadrant—fraud scoring, transaction monitoring, customer service automation—deserves an internal or co-owned solution path. Use managed GPUs, self-hosted inference, or direct partnerships with foundation model providers.
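The frequency-versus-impact screen can be written down as an explicit rule so that every business unit applies it the same way. In this sketch the volume threshold, the 1-to-5 impact scale, and the example volumes are hypothetical choices a bank would set for itself; only the high-high rule comes from the text above.

```python
from typing import NamedTuple


class UseCase(NamedTuple):
    name: str
    monthly_volume: int  # runs per month (hypothetical figures)
    impact: int          # business/risk impact on an assumed 1-5 scale


def sourcing_path(uc: UseCase, volume_threshold: int = 100_000,
                  impact_threshold: int = 4) -> str:
    """Place a use case on the frequency-versus-impact grid."""
    high_frequency = uc.monthly_volume >= volume_threshold
    high_impact = uc.impact >= impact_threshold
    if high_frequency and high_impact:
        return "internal or co-owned"  # the high-high quadrant
    return "buy or rent"               # other quadrants: review case by case


if __name__ == "__main__":
    portfolio = [
        UseCase("fraud scoring", 3_000_000, 5),
        UseCase("transaction monitoring", 2_000_000, 5),
        UseCase("customer service automation", 400_000, 4),
        UseCase("board-pack drafting", 40, 3),
    ]
    for uc in portfolio:
        print(f"{uc.name:<30} -> {sourcing_path(uc)}")
```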

3. Rewrite Contracts for AI Reality

Traditional SaaS contracts don’t address metered AI services. Demand usage caps per month and per minute, step-down pricing at volume tiers, change-in-terms escape clauses if upstream providers raise prices beyond specified thresholds, model optionality rights, and comprehensive logging for governance compliance.
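Caps and step-down tiers are easier to negotiate, and to enforce, when both sides compute them the same way. The sketch below shows one hypothetical tier table and a guard a bank could run in its own API gateway, so that per-minute and per-month caps become the kill switch the opening CFO was asking for. Tier boundaries, prices, and cap values are illustrative assumptions, not market rates; “step-down” here means each query is billed at the rate of the tier it falls into, like tax bands.

```python
import time
from collections import deque

# Hypothetical step-down tiers: (cumulative monthly queries up to, price per query).
TIERS = [(1_000_000, 0.020), (5_000_000, 0.015), (float("inf"), 0.010)]


def monthly_bill(queries: int, tiers=TIERS) -> float:
    """Bill each query at the price of the tier it falls into."""
    bill, lower = 0.0, 0
    for upper, price in tiers:
        in_tier = max(0, min(queries, upper) - lower)
        bill += in_tier * price
        if queries <= upper:
            break
        lower = upper
    return bill


class UsageCapGuard:
    """Refuses calls that would breach a per-minute or per-month cap."""

    def __init__(self, per_minute: int, per_month: int, clock=time.monotonic):
        self.per_minute = per_minute
        self.per_month = per_month
        self.clock = clock          # injectable so tests can control time
        self.recent = deque()       # timestamps of calls in the last 60 seconds
        self.month_count = 0        # calls so far in this billing month

    def allow(self) -> bool:
        now = self.clock()
        while self.recent and now - self.recent[0] >= 60:
            self.recent.popleft()   # drop calls older than the sliding window
        if len(self.recent) >= self.per_minute or self.month_count >= self.per_month:
            return False            # cap reached: caller should queue or degrade
        self.recent.append(now)
        self.month_count += 1
        return True

    def reset_month(self) -> None:
        """Call at the start of each billing month."""
        self.month_count = 0
```

For example, 2,000,000 queries in a month bill as 1,000,000 × 0.020 + 1,000,000 × 0.015 = 35,000 under these tiers. Keeping the guard on the bank’s side means the cap holds even if the vendor’s own metering is late or wrong.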

4. Maintain Shadow Capabilities

For every critical outsourced AI function, fund and set up a small internal prototype, even if it never replaces the vendor. This provides a fallback if vendors fail and forces your teams to understand the underlying data, latency, and quality trade-offs rather than outsourcing the strategic thinking.

5. Track the Right Metrics

Monitor AI gross margins (AI costs versus value created), cost-per-AI-action (per scored transaction, per resolved chat), and AI dependency ratios (concentration risk with any single provider). When metrics trend negatively for two consecutive months, route work to cheaper models or bring capabilities in-house.
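The two-consecutive-months rule is simple enough to automate. This sketch tracks the three metrics named above for each month and raises a flag when each of the last two month-over-month changes was negative (falling AI gross margin, rising cost per action, or rising concentration with the largest provider). How “value created” is measured, and the field names themselves, are assumptions each institution would define for itself.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class MonthlyAIMetrics:
    month: str
    ai_cost: float             # total AI spend for the month
    value_created: float       # attributed value, e.g. fraud losses avoided (assumed > 0)
    actions: int               # scored transactions, resolved chats, ...
    top_provider_share: float  # share of AI spend with the single largest provider

    @property
    def ai_gross_margin(self) -> float:
        return (self.value_created - self.ai_cost) / self.value_created

    @property
    def cost_per_action(self) -> float:
        return self.ai_cost / self.actions


def worsened(prev: MonthlyAIMetrics, cur: MonthlyAIMetrics) -> bool:
    """A month trends negatively if any of the three metrics moves the wrong way."""
    return (cur.ai_gross_margin < prev.ai_gross_margin
            or cur.cost_per_action > prev.cost_per_action
            or cur.top_provider_share > prev.top_provider_share)


def needs_action(history: list[MonthlyAIMetrics], months: int = 2) -> bool:
    """True if each of the last `months` month-over-month changes was negative."""
    if len(history) < months + 1:
        return False
    window = history[-(months + 1):]
    return all(worsened(a, b) for a, b in zip(window, window[1:]))


if __name__ == "__main__":
    history = [
        MonthlyAIMetrics("2025-01", 100_000, 400_000, 1_000_000, 0.60),
        MonthlyAIMetrics("2025-02", 130_000, 400_000, 1_000_000, 0.60),
        MonthlyAIMetrics("2025-03", 160_000, 400_000, 1_000_000, 0.65),
    ]
    if needs_action(history):
        print("Two negative months: route to cheaper models or bring in-house.")
```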

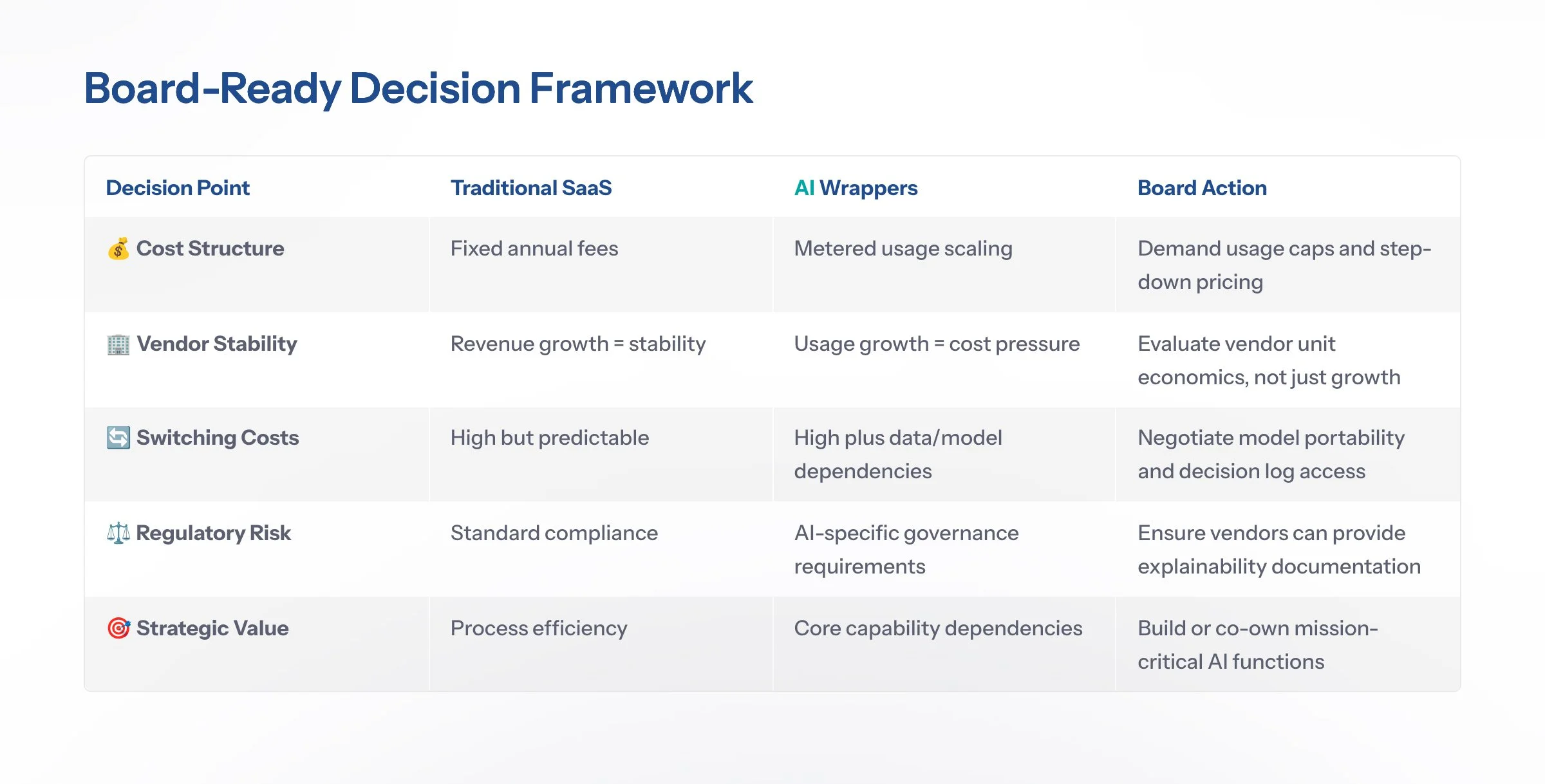

Board-Ready Decision Framework

The Strategic Choice That Defines Your Next Decade

European banks and payment service providers face a fundamental choice: treat AI as another software category to purchase, or recognize it as the new infrastructure layer that determines competitive advantage. The institutions building AI capabilities—not just buying them—will dominate the next decade of financial services.

Five Questions for Your Next Board Meeting:

Can our current AI vendors demonstrate sustainable unit economics at 10× our current usage?

Which AI functions are so critical that vendor failure would disrupt operations?

Do our AI contracts include usage caps, model optionality, and governance logging requirements?

What percentage of our AI capabilities could we replicate internally if vendors failed?

Are we building AI expertise or just buying AI outputs?

The answers will determine whether your institution leads or follows in the AI-native banking era. The CFO in Brussels from the opening example learned this lesson the expensive way. Your board can choose the strategic path before the next invoice arrives.

If this resonates, please consider subscribing to “The Next Agenda”. For briefings or board-level discussions, feel free to reach out to me; I also welcome Independent Non-Executive Director conversations where my expertise adds value.

References

[1] Harvard Business Review, “The AI Revolution Won’t Happen Overnight” (Jun 24, 2025).

[2] Aieutics, “AI‑Native Metrics: Why Traditional SaaS Measurements Fail AI Startups” (Jul 4, 2025).

[3] Sacra Research, “Jasper: The $72M ARR Google Suite of Generative AI” (Nov 17, 2022).

[4] TechRadar, “Samsung Galaxy S26 could ship with Perplexity baked in” (Jun 2, 2025).

[5] NVIDIA Blog, “bunq debunks financial fraud with GenAI (approx. 100× training)” (Jun 3, 2024).

[6] Heise Online, “DKB cooperates with OpenAI” (May 6, 2025).

[7] Deutsche Bank News, “Deutsche Bank Partners with NVIDIA to embed AI” (Dec 7, 2022).

[8] Nasdaq (Hedder), “Why Germany Is Building a Sovereign AI Cloud with Nvidia” (Jun 13, 2025).

[9] CaixaBank, press note: “CaixaBank, together with Revelock, is developing an AI solution to reinforce digital security” (2021).

[10] Teradata and Constellation Research, case studies: “Danske Bank fights fraud with ML and DL” (2018); Forbes, “Danske Bank Uses Tech To Prevent Digital Fraud” (2017).

[11] Financial Times, “Spies, spinners, solicitors: Builder.ai’s ‘perfectly normal’ creditor list in full” (Jul 2025).

[12] eWeek, “700 Engineers – Not AI – Developed Code at $1.5B Builder.ai; files for bankruptcy” (Jun 13, 2025).